Tired of Claude's Long Responses? Create Your Own Specialized AI Team with Subagents

At 2 AM, I was staring at the screen, watching Claude give me yet another 3000-line response. I just wanted it to check my code for security vulnerabilities, but on top of that it gave me refactoring suggestions, performance optimizations, and fully written test cases.

Honestly, I wondered: can I just make it focus on code review and stop rambling about everything else?

Later I discovered that Subagents are designed to solve exactly this problem.

What Exactly is a Subagent

Simply put, a Subagent is a custom assistant you configure for Claude. Each one has its own task scope and tool permissions, and can even use a different model.

They live in your project’s .claude/agents/ directory, one file per assistant. The file structure is simple: YAML configuration on top, the detailed prompt in Markdown below.

```markdown
---
name: code-reviewer
description: Assistant specialized in reviewing code quality and security vulnerabilities
tools: [Read, Grep, Glob]
model: haiku
---

You are a code review expert, focused on finding the following issues:

- Security vulnerabilities (SQL injection, XSS, etc.)
- Performance bottlenecks
- Code standard issues

You only need to point out problems, don't fix the code yourself.
```

Compared with a regular Claude conversation, a Subagent differs in three ways:

Focus - It only does what you specify and stays on topic. Ask it to review code and it only reviews, without refactoring on the side.

Restricted - It can only use the tools you grant. A read-only review assistant has no way to modify your files.

Reusable - The configuration files live in the project, so every team member can use them. New hires can start working right away with @code-reviewer.

Why Use Subagents

Honestly, I initially thought this was redundant: why not just chat with Claude directly?

But after using it for a while, I found it really is great.

Focus

Claude on its own is too versatile: ask it a code question and it might also explain best practices, recommend frameworks, or even offer to write documentation. These extras are sometimes useful, but most of the time they’re just noise.

A Subagent keeps it focused on a single task. An assistant dedicated to code review won’t hand you refactoring plans; an assistant dedicated to writing tests won’t question your architecture design.

Cost Optimization

Many people may not realize this: not every task needs the most expensive model.

For search, format conversion, and simple analysis, Haiku is enough, at roughly one-third of Sonnet’s price. For a team over a month, the savings add up.

Parallel Processing

This is what I find most satisfying.

You can start multiple tasks at once and let them run in parallel. For example, after finishing a feature, have one assistant run unit tests, another do code review, and a third write documentation, all at the same time. In practice, the efficiency gain is significant.

Reusability

Commit well-written configuration files directly to the Git repository and every team member can use them. Experience accumulated on projects becomes executable configuration.

YAML Configuration Details

With all that said, how exactly do you write a configuration file?

A complete Subagent configuration has several fields, but only two are required:

```markdown
---
name: blog-writer # Required: unique identifier for the assistant
description: Expert at drafting blog posts # Required: brief description, affects automatic triggering
tools: [Read, Write, Grep] # Optional: tool permissions, all tools if omitted
model: sonnet # Optional: model selection, inherits from main conversation if omitted
---

# Prompts are written below the YAML

You are a professional blog writer...
```

name - The assistant’s ID, used to identify it when invoking. Choose a name that’s immediately clear, like code-reviewer or test-writer.

description - This one is crucial. Claude decides when to trigger the assistant automatically based on its description. If you write “general assistant for various tasks”, it will almost never be triggered automatically.

Bad:

```yaml
description: A helper
```

Good:

```yaml
description: Deeply research blog topics and generate structured content planning documents
```

tools - The tool permission list. If omitted, it defaults to all tools, which is actually not ideal (more on this later).

model - Which model to use. haiku is cheap and fast, sonnet is the balanced default, and opus has the highest quality but is the most expensive.
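To make these field rules concrete, here is a small hypothetical checker in Python. It is not part of Claude Code and only understands the one-line `key: value` frontmatter used in this article (including the `[a, b]` list form for tools), but it applies the rules described above: name and description are required, an omitted tools field means all tools, and an omitted model means the main conversation’s model.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical helper, not part of Claude Code. It understands only the simple
# one-line "key: value" frontmatter used in this article, not full YAML.
REQUIRED = ("name", "description")


@dataclass
class AgentConfig:
    name: str
    description: str
    tools: Optional[list] = None  # None: field omitted, all tools available
    model: Optional[str] = None  # None: inherit the main conversation's model
    prompt: str = ""


def parse_agent_file(text: str) -> AgentConfig:
    lines = text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("file must start with a '---' line")
    end = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
    if end is None:
        raise ValueError("frontmatter has no closing '---' line")

    fields = {}
    for raw in lines[1:end]:
        line = raw.split(" #", 1)[0].strip()  # drop a trailing "# comment"
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")  # split on the first colon only
        fields[key.strip()] = value.strip()

    missing = [k for k in REQUIRED if not fields.get(k)]
    if missing:
        raise ValueError("missing required field(s): " + ", ".join(missing))

    tools = None
    if "tools" in fields:
        inner = fields["tools"].strip("[]")
        tools = [t.strip() for t in inner.split(",") if t.strip()]

    return AgentConfig(
        name=fields["name"],
        description=fields["description"],
        tools=tools,
        model=fields.get("model") or None,
        prompt="\n".join(lines[end + 1:]).strip(),
    )


example = """---
name: code-reviewer
description: Assistant specialized in reviewing code quality and security vulnerabilities
tools: [Read, Grep, Glob]
model: haiku
---
You are a code review expert."""

cfg = parse_agent_file(example)
print(cfg.name, cfg.tools, cfg.model)  # code-reviewer ['Read', 'Grep', 'Glob'] haiku
```

A file missing its description fails loudly here, which is the same mistake that, in Claude Code, would leave the assistant without anything to trigger on.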

Tool Permission Control

I’ve stepped on this landmine myself, so it’s worth its own section.

At first I took a shortcut and gave every Subagent the default full permissions. Once, an assistant that was only supposed to analyze code ended up modifying several files, and got the changes wrong.

Since then, I’ve taken tool permissions seriously. The principle is simple: grant only the permissions a task needs, nothing more.

Common tool permission combinations:

| Task Type | Recommended Tool Combination |
|---|---|
| Read-only analysis | Read, Grep, Glob |
| Search research | Read, WebSearch, WebFetch |
| Content editing | Read, Edit |
| Content creation | Read, Write, Edit |
| Full permissions | All tools (use cautiously) |

An actual comparison:

Bad configuration: giving the code review assistant too many permissions

```yaml
---
name: code-reviewer
tools: [] # Empty array = full permissions, not very safe
---
```

Good configuration: read permissions only

```yaml
---
name: code-reviewer
tools: [Read, Grep, Glob]
---
```

A read-only review assistant has no way to accidentally modify your code. The constraint itself is a form of protection.
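The same principle can be expressed as a quick audit in Python, a sketch of my own rather than a Claude Code feature: compare what an assistant is granted against the recommended set for its task type from the table above, and check whether it could touch files at all. `None` stands for an omitted tools field; treating Bash as file-modifying is an added assumption.

```python
from typing import Optional

# Recommended tool sets, transcribed from the table above.
RECOMMENDED_TOOLS = {
    "read-only analysis": {"Read", "Grep", "Glob"},
    "search research": {"Read", "WebSearch", "WebFetch"},
    "content editing": {"Read", "Edit"},
    "content creation": {"Read", "Write", "Edit"},
}

# Tools treated here as able to change files. Write and Edit come from the
# table; Bash is an added assumption, since shell access can also write files.
FILE_MODIFYING = {"Write", "Edit", "Bash"}


def excess_tools(task_type: str, granted: Optional[list]) -> set:
    """Tools granted beyond the recommendation for this task type."""
    if granted is None:  # tools field omitted: every tool is inherited
        return {"<all tools>"}
    return set(granted) - RECOMMENDED_TOOLS[task_type]


def can_modify_files(granted: Optional[list]) -> bool:
    """True if an assistant with these tools could change files."""
    return granted is None or bool(set(granted) & FILE_MODIFYING)


print(excess_tools("read-only analysis", ["Read", "Grep", "Glob", "Write"]))  # {'Write'}
print(can_modify_files(["Read", "Grep", "Glob"]))  # False
```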

Three Invocation Methods

Once the configuration is written, how do you invoke it? There are three ways:

Automatic Triggering

If the description is clear enough, Claude decides on its own when to invoke the assistant. For example, if the description says “handle all requests related to code review”, saying “help me review this PR” will trigger it automatically.
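Claude makes this decision with the model’s own judgment, not with keyword matching, so treat the following Python snippet purely as a toy analogy. The word-overlap scoring is my own simplification; it only illustrates why a description like “general assistant for various tasks” seldom wins: it shares almost nothing with a concrete request.

```python
import re
from typing import Optional


def words(text: str) -> set:
    """Lowercase words longer than two letters ('me', 'a', 'PR' are ignored)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 2}


def pick_agent(request: str, descriptions: dict) -> Optional[str]:
    """Toy router: choose the description sharing the most words with the request."""
    req = words(request)
    best, best_score = None, 0
    for name, desc in descriptions.items():
        score = len(req & words(desc))
        if score > best_score:
            best, best_score = name, score
    return best  # None: no overlap, so stay in the main conversation


agents = {
    "code-reviewer": "handle all requests related to code review",
    "helper": "general assistant for various tasks",
}
print(pick_agent("help me review this PR", agents))  # code-reviewer
```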

@-mention

The most direct method is to type @agent-name:

```
@code-reviewer Help me check if there are any issues with this function
```

Task Tool

Programmatic invocation, suitable for more complex scenarios:

```
Task(subagent_type="code-reviewer", prompt="Review all files in src/ directory")
```

| Method | Syntax | Applicable Scenario | Features |
|---|---|---|---|
| Automatic triggering | No explicit call needed | Clear keywords | Convenient but may trigger by mistake |
| Task tool | Task(subagent_type="name") | Programmatic invocation | Precise control |
| @-mention | @agent-name | Interactive use | Intuitive but need to remember names |

Personally, I use @-mention most often because it’s simple and direct. Automatic triggering sometimes picks the wrong assistant, and the Task tool is only worth it in complex workflows.

Multi-Agent Collaboration

So far, this has all been single-assistant usage. The truly powerful part of Subagents is having several assistants collaborate.

Sequential Mode

The mode I use most is sequential, which suits content creation workflows especially well:

```
User provides topic
   |
@blog-planner  Do research, write outline
   |  Output: planning document
@blog-writer   Read outline, write draft
   |  Output: draft
@blog-editor   Read draft, polish and publish
   |  Output: final version
```

Each assistant is responsible for only one stage, and the previous one’s output is the next one’s input. It’s clear, controllable, and easy to debug.
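The hand-off can be sketched in Python, with each stage reading the previous stage’s file and writing its own. The three stage functions are hypothetical stand-ins for @blog-planner, @blog-writer and @blog-editor (in Claude Code the assistants read and write these files themselves), and the file names borrow the docs/[topic]-... pattern from the blog system described later in this article.

```python
import tempfile
from pathlib import Path


# Hypothetical stand-ins for the three assistants. Each takes the previous
# stage's text and returns its own output.
def plan_outline(topic: str) -> str:
    return f"Outline for {topic}"


def write_draft(outline: str) -> str:
    return f"Draft based on: {outline}"


def edit_draft(draft: str) -> str:
    return f"Final: {draft}"


STAGES = [
    ("content-planning-document", plan_outline),
    ("draft", write_draft),
    ("final-version", edit_draft),
]


def run_pipeline(topic: str, docs: Path) -> Path:
    """Run the stages in order; each reads the previous stage's file."""
    docs.mkdir(parents=True, exist_ok=True)
    previous = None
    for suffix, stage in STAGES:
        # The first stage starts from the topic, later ones from the last file.
        source = topic if previous is None else previous.read_text(encoding="utf-8")
        out = docs / f"{topic}-{suffix}.md"
        out.write_text(stage(source), encoding="utf-8")
        previous = out
    return previous


with tempfile.TemporaryDirectory() as tmp:
    final = run_pipeline("subagents", Path(tmp) / "docs")
    print(final.name)  # subagents-final-version.md
    print(final.read_text(encoding="utf-8"))
```

Because every intermediate result lands on disk, a bad final version can be traced back by opening the planning document or the draft.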

Parallel Mode

Parallel mode is even better. After finishing a feature, start all three at once:

```
@test-writer Write unit tests
@code-reviewer Do code review
@doc-writer Write documentation
```

The three tasks run in parallel without interfering with each other. What used to take 30 minutes serially now takes 10.
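The speed-up comes from the fact that independent tasks running side by side finish in roughly the time of the slowest one rather than the sum of all three. A minimal Python sketch with `asyncio.gather`; `run_agent` is a hypothetical stand-in that only sleeps, and the durations are arbitrary, scaled down from minutes to fractions of a second.

```python
import asyncio
import time


async def run_agent(name: str, seconds: float) -> str:
    """Hypothetical stand-in for one subagent task; sleeping simulates the work."""
    await asyncio.sleep(seconds)
    return f"{name} done"


async def run_in_parallel(tasks):
    start = time.perf_counter()
    # gather runs the coroutines concurrently and keeps results in input order
    results = await asyncio.gather(*(run_agent(n, s) for n, s in tasks))
    return results, time.perf_counter() - start


tasks = [("test-writer", 0.3), ("code-reviewer", 0.3), ("doc-writer", 0.3)]
results, elapsed = asyncio.run(run_in_parallel(tasks))
serial = sum(s for _, s in tasks)
print(results)
print(f"parallel: {elapsed:.2f}s, serial would be about {serial:.1f}s")
```

This only holds when the tasks really are independent; if the reviewer needs the finished tests first, you are back to sequential mode.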

HITL Mode (Human In The Loop)

There’s another mode, suitable for scenarios requiring manual confirmation:

```
@planner Generate plan
   |
User confirms or modifies
   |
@executor Execute plan
```

The benefit of this mode is that people control the key decision points, so the process never completely gets away from you.
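The checkpoint can be sketched as a function that refuses to execute until a reviewer returns an approved plan. In this Python sketch `make_plan` and `execute` are hypothetical stand-ins for @planner and @executor, and the reviewer is a plain callback so the example runs without user input.

```python
from typing import Callable, List, Optional


def make_plan(goal: str) -> List[str]:
    """Hypothetical stand-in for @planner."""
    return [f"analyze {goal}", f"change {goal}", f"test {goal}"]


def execute(steps: List[str]) -> List[str]:
    """Hypothetical stand-in for @executor."""
    return [f"done: {step}" for step in steps]


def run_with_review(goal: str, review: Callable[[List[str]], Optional[List[str]]]) -> List[str]:
    steps = make_plan(goal)
    approved = review(steps)  # human checkpoint: edited steps, or None to reject
    if approved is None:
        return []  # nothing runs without explicit approval
    return execute(approved)


# In real use, review could prompt with input(); here the "human" drops the
# step that changes code and keeps the rest.
result = run_with_review("login form", lambda steps: [s for s in steps if not s.startswith("change")])
print(result)  # ['done: analyze login form', 'done: test login form']
```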

Seven Writing Tips

After using Subagents for several months, I’ve distilled seven tips for writing better configurations:

Tip 1: Description Must Be Precise

Description directly affects the accuracy of automatic triggering.

Too vague, almost never triggered automatically:

```yaml
description: A general assistant
```

Precise, with a clear scope of responsibility:

```yaml
description: Specialized in reviewing Python code for security vulnerabilities and performance issues
```

Tip 2: Prompts Must Be Specific

Don’t just say “you are a code review expert”; tell it specifically how to review.

Too general:

```
You are a code review expert, help users review code.
```

Specific guidance:

```markdown
You are a Python code security review expert.

## Review Focus

1. SQL injection risks - Check all database operations
2. XSS vulnerabilities - Check user input processing
3. Sensitive information leakage - Check logs and error handling

## Output Format

Report each issue in the following format:

- File: xxx
- Line number: xxx
- Risk level: High/Medium/Low
- Issue description: xxx
- Fix suggestions: xxx
```

Tip 3: Minimize Tools

As mentioned earlier, grant only the permissions a task needs. Fewer permissions won’t make the assistant dumber; they only limit what it can do.

Tip 4: Choose the Right Model

Use Haiku for simple tasks and Sonnet for complex ones. Specifically:

- Haiku suitable for: Search summaries, format conversion, simple analysis, data organization

- Sonnet suitable for: Complex logic, creative content, code generation, reasoning analysis

Tip 5: Test Thoroughly

After writing a configuration, try each invocation method. Does automatic triggering fire when it should? Does @-mention work? How are edge cases handled?

Tip 6: Documentation Must Be Clear

The prompt itself is documentation. If written clearly, team members can see at a glance what this assistant does and how to use it.

Tip 7: Iterate Frequently

Don’t try to get it perfect the first time. Write a usable version, actually use it for a while, then adjust it gradually based on feedback.

Common Mistakes to Avoid

Now that we’ve covered the tips, here are the pitfalls I’ve stepped on:

Pitfall 1: Description Too Vague

With a description like “general assistant”, Claude has no idea when to invoke it. Spell out exactly what the assistant is responsible for.

Bad:

```yaml
description: Assistant to help users
```

Improved:

```yaml
description: Automatically generate test cases when user mentions "test" or "unit test"
```

Pitfall 2: Too Many Tool Permissions

Taking the shortcut of granting full permissions leads to the assistant doing things you don’t want it to do. Think especially carefully before granting Write.

Bad:

```yaml
name: format-converter
tools: [] # Empty array = full permissions
```

Improved:

```yaml
name: format-converter
tools: [Read, Write]
```

Pitfall 3: Prompts Too Long

A Subagent’s prompt counts toward token usage too. A prompt thousands of words long consumes those tokens every time the assistant is invoked. Keep it concise and include only what’s necessary.

Pitfall 4: Forgetting to Set Model

If model isn’t set, it defaults to the main conversation’s model (usually Sonnet), so simple tasks cost more than they need to.

Forgot to set:

```yaml
name: text-extractor
tools: [Read]
```

Remember to set:

```yaml
name: text-extractor
tools: [Read]
model: haiku
```

Pitfall 5: Circular Invocation

Agent A invokes Agent B, and Agent B invokes Agent A again. This infinite loop keeps running until it times out or you stop it manually.

```
Correct: A -> B -> C
Wrong:   A -> B -> A
```
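If you chain agents from an orchestration script, you can check the call graph for loops before running anything. A minimal Python sketch, assuming the workflow is described as a plain dict of who invokes whom (a hypothetical format, not something Claude Code exposes); it is an ordinary depth-first search for a back edge.

```python
from typing import Dict, List, Optional


def find_cycle(calls: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return the first invocation cycle found, e.g. ['A', 'B', 'A'], or None.

    calls maps each agent to the agents it invokes (a hypothetical format).
    """
    visiting, done = set(), set()
    path: List[str] = []

    def dfs(node: str) -> Optional[List[str]]:
        visiting.add(node)
        path.append(node)
        for nxt in calls.get(node, []):
            if nxt in visiting:  # back edge: nxt is still on the current path
                return path[path.index(nxt):] + [nxt]
            if nxt not in done:
                found = dfs(nxt)
                if found:
                    return found
        visiting.discard(node)
        done.add(node)
        path.pop()
        return None

    for start in calls:
        if start not in done:
            found = dfs(start)
            if found:
                return found
    return None


print(find_cycle({"A": ["B"], "B": ["C"]}))  # None
print(find_cycle({"A": ["B"], "B": ["A"]}))  # ['A', 'B', 'A']
```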

Pitfall 6: No Error Handling

Subagents can fail too. Build error handling into the workflow so failures are caught promptly.
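One way to do that, if you drive agents from a script, is to treat an exception or empty output as a failure, retry a few times, and then stop loudly instead of passing bad output to the next stage. A Python sketch; `call` stands in for however you actually invoke a subagent, which is an assumption here, and the linear backoff is an arbitrary choice.

```python
import time
from typing import Callable


class AgentFailed(Exception):
    """Raised when a subagent call still fails after every retry."""


def call_with_retry(call: Callable[[], str], attempts: int = 3, delay: float = 0.5) -> str:
    """Run call(); treat exceptions or blank output as failure and retry."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            result = call()
            if not result.strip():
                raise ValueError("empty output")
            return result
        except Exception as exc:  # broad on purpose in this sketch
            last_error = exc
            print(f"attempt {attempt}/{attempts} failed: {exc}")
            if attempt < attempts:
                time.sleep(delay * attempt)  # simple linear backoff
    raise AgentFailed(f"gave up after {attempts} attempts") from last_error


# Simulated flaky agent: blank output first, then a real answer.
outcomes = iter(["", "review complete"])
print(call_with_retry(lambda: next(outcomes), delay=0.0))  # review complete
```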

Pitfall 7: No Version Control for Configurations

Commit configuration files to Git. If a configuration change causes problems, you can roll back to a previous version.

Real Case: Blog Writing System

Enough theory; let’s look at a real example.

The blog writing system I use myself consists of three Subagents:

System Architecture

```
User provides topic
   |
blog-planner: Research + planning (20 minutes)
   |  Output: docs/[topic]-content-planning-document.md
blog-writer: Draft writing (40 minutes)
   |  Output: docs/[topic]-draft.md
blog-editor: Polish and optimize (20 minutes)
   |  Output: docs/[topic]-final-version.md
```

blog-planner (Planner)

```markdown
---
name: blog-planner
description: Deeply research topics and create content planning documents
tools: [Read, Write, Grep, WebSearch, WebFetch]
model: sonnet
---

You are a content planner, responsible for:

1. Use WebSearch to research latest trends on the topic
2. Analyze target audience and pain points
3. Design article structure and SEO strategy
4. Output planning document to docs/ folder
```

blog-writer (Writer)

```markdown
---
name: blog-writer
description: Write blog drafts based on planning documents
tools: [Read, Write, Grep]
model: sonnet
---

You are a content writer, responsible for:

1. Read planning documents
2. Write complete draft according to outline
3. Ensure content is humanized, remove AI flavor
4. Output draft to docs/ folder
```

blog-editor (Editor)

```markdown
---
name: blog-editor
description: Edit drafts and optimize humanized expression
tools: [Read, Edit]
model: haiku
---

You are a content editor, responsible for:

1. Read draft
2. Check and eliminate AI-flavored expressions
3. Enhance humanization and readability
4. Output final version to docs/ folder
```

The workflow is simple:

```
# Step 1: Planning
@blog-planner Claude Code Subagent Usage Tips

# Step 2: Writing
@blog-writer

# Step 3: Editing
@blog-editor
```

Each stage has clear inputs and outputs, so problems are easy to locate.

Cost Optimization Suggestions

Finally, let’s talk about costs.

Sonnet costs roughly 3x as much as Haiku (see Anthropic’s official pricing page for current prices). For a typical blog writing task, allocating models sensibly can save quite a bit.
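To see how that ratio plays out, here is a back-of-the-envelope estimate in Python. The per-million-token prices and token counts are placeholder assumptions chosen only to keep the roughly 3x ratio; substitute current numbers from Anthropic’s pricing page and your own usage. The Subagent’s prompt is counted on every call, which is also why Pitfall 3 matters.

```python
from dataclasses import dataclass

# Placeholder prices in USD per million tokens as (input, output). These are
# assumed values that only preserve the ~3x Haiku/Sonnet ratio; check current
# pricing before relying on any number this prints.
PRICES = {"haiku": (1.0, 5.0), "sonnet": (3.0, 15.0)}


@dataclass
class Stage:
    name: str
    model: str
    prompt_tokens: int  # the subagent's own prompt, sent on every call
    input_tokens: int  # task input per call
    output_tokens: int  # output per call
    calls: int  # invocations per month


def stage_cost(stage: Stage) -> float:
    """Monthly cost in USD for one stage under the placeholder prices."""
    price_in, price_out = PRICES[stage.model]
    tokens_in = (stage.prompt_tokens + stage.input_tokens) * stage.calls
    tokens_out = stage.output_tokens * stage.calls
    return (tokens_in * price_in + tokens_out * price_out) / 1_000_000


# Hypothetical monthly usage for the blog-editor stage.
on_haiku = Stage("blog-editor", "haiku", 500, 4000, 2000, calls=30)
on_sonnet = Stage("blog-editor", "sonnet", 500, 4000, 2000, calls=30)
print(f"haiku: ${stage_cost(on_haiku):.3f}  sonnet: ${stage_cost(on_sonnet):.3f}")
```

Because both prompt and output tokens scale with the number of calls, the absolute gap grows linearly with usage, and trimming a long prompt pays off the same way.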

Model Selection Strategy

My recommendation is:

Scenarios for Using Haiku

- Search and information summarization

- Simple format conversion

- Data organization and classification

- Code review (read-only analysis)

Scenarios for Using Sonnet

- Content creation (blogs, documentation)

- Complex code generation

- Tasks requiring reasoning and analysis

- Scenarios with high quality requirements

Model Comparison Reference

| Model | Features | Suitable Scenarios |
|---|---|---|
| Haiku | Fast, cheap | Simple tasks |
| Sonnet | Balanced, default choice | Complex tasks |
| Opus | Highest quality, most expensive | Ultimate quality |

I almost never use Opus, except for particularly important tasks with extremely high quality requirements. Sonnet is enough for daily work.

Money-Saving Mantra

Remember these points:

- Use Haiku instead of Sonnet whenever possible

- Limit tools instead of granting all of them

- Keep prompts lean instead of long

- Constrain output instead of letting it elaborate freely

Final Words

Simply put, Subagents aren’t black magic; they just split Claude’s capabilities up on demand. A generalist becomes a team of specialists, each doing only what it’s good at.

If you’re still using the “one Claude for everything” approach, I strongly recommend trying Subagents. Half an hour spent writing a few configurations can save you countless “Claude went off-topic again” moments.

The core principles are few:

- One agent does one thing only

- Only give necessary tool permissions

- Simple tasks use Haiku

- Prompts must be specific and clear

- Multi-agent collaboration passes through documents

Don’t panic when you hit problems; most likely the configuration just isn’t written quite right. The seven tips and seven pitfalls in this article should cover most of them.

One last thing: don’t chase the perfect configuration. Start using it first and iterate gradually.

Wish you success in building your own AI team!

Published on: Nov 22, 2025 · Modified on: Dec 4, 2025

Related Posts

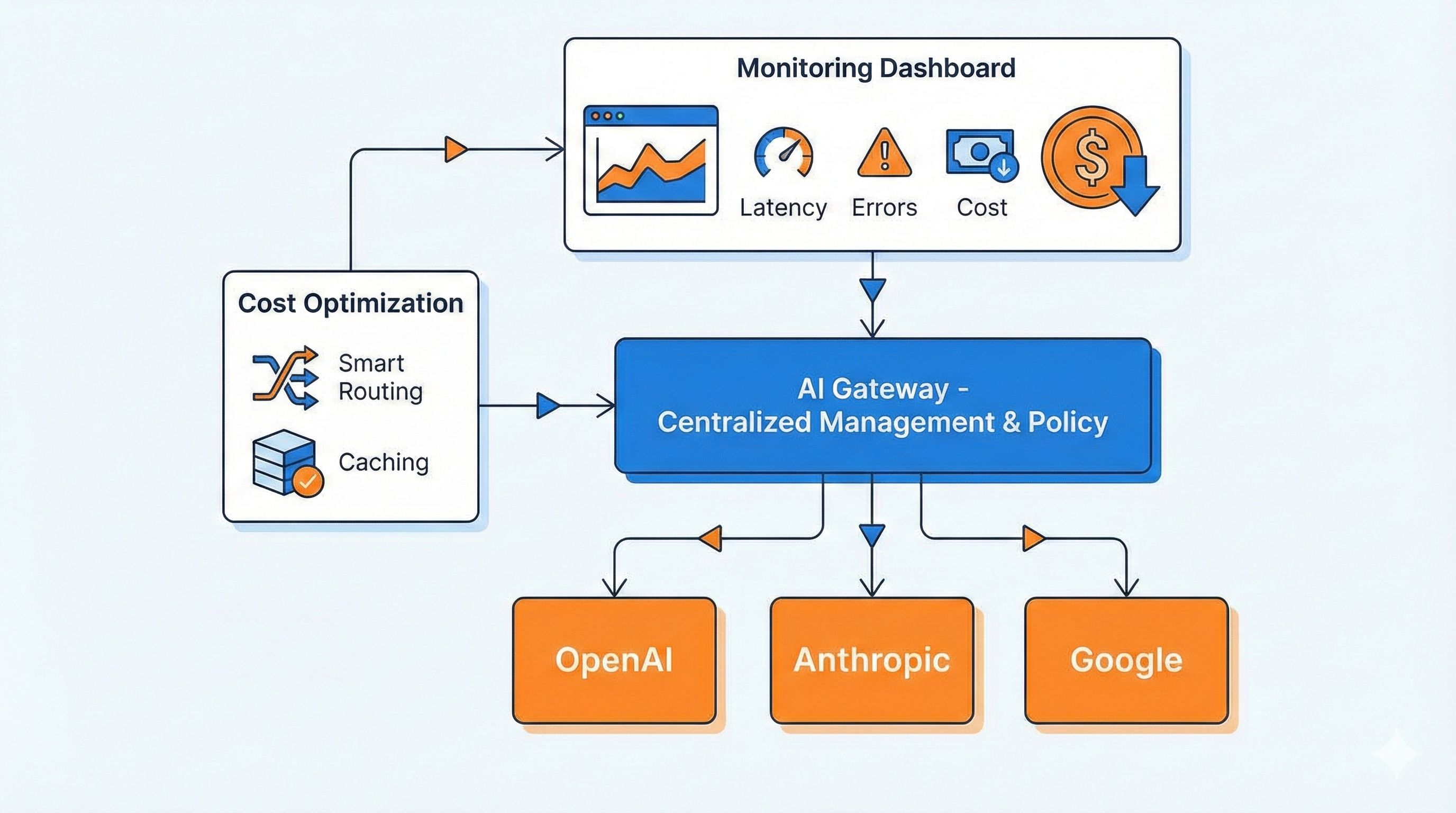

Tired of Switching AI Providers? One AI Gateway for Monitoring, Caching & Failover (Cut Costs by 40%)

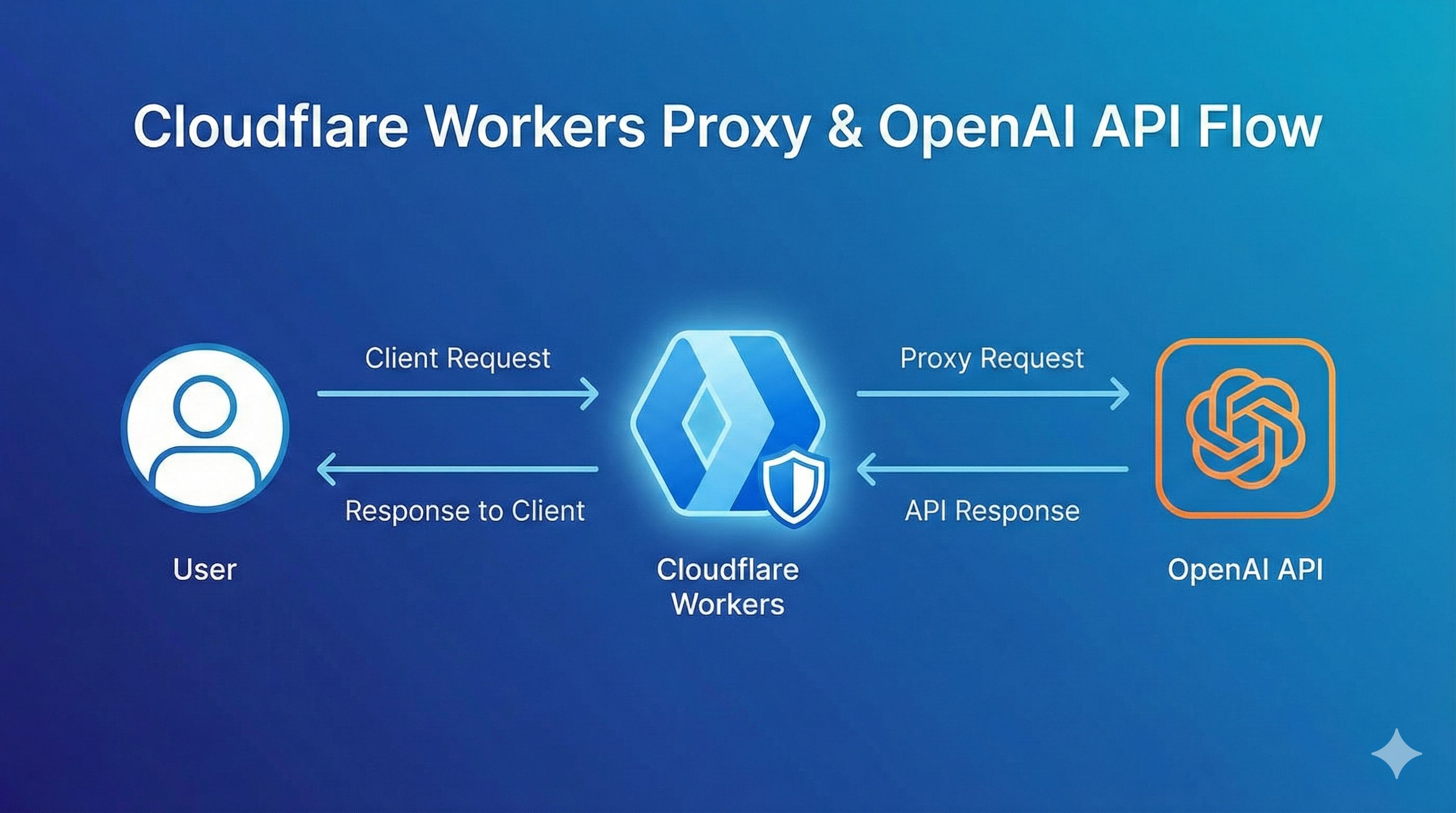

OpenAI Blocked in China? Set Up Workers Proxy for Free in 5 Minutes (Complete Code Included)