Next.js Sitemap & robots.txt Configuration Guide: Getting Your Site Indexed Faster

You’ve just launched your website with high hopes. You search for your site name on Google and find nothing. Nothing at all.

You refresh a few times and try different keywords: still nothing. You open Google Search Console to check on your submitted Sitemap, and it shows “Couldn’t fetch.” Your heart sinks. This isn’t supposed to happen. Without search traffic, even the best content feels invisible.

Honestly, I’ve been there. With my first Next.js project, I followed a tutorial to set up a Sitemap, but Google couldn’t crawl it. I spent a week trying different approaches before discovering that my robots.txt was misconfigured: it was blocking the entire site. I still remember that feeling of helplessness when I realized I’d made such a careless mistake.

This article is my attempt to save you that same pain. I’ve compiled all the pitfalls I’ve encountered, documentation I’ve dug through, and configurations I’ve tested. I’ll explain these two files in the most straightforward way possible, give you three different Sitemap generation approaches, point out the common mistakes, and share a real failure story and how we recovered from it. If you’re dealing with unindexed pages, Sitemap errors, or uncertainty about dynamic routes, this should help you avoid a lot of unnecessary detours.

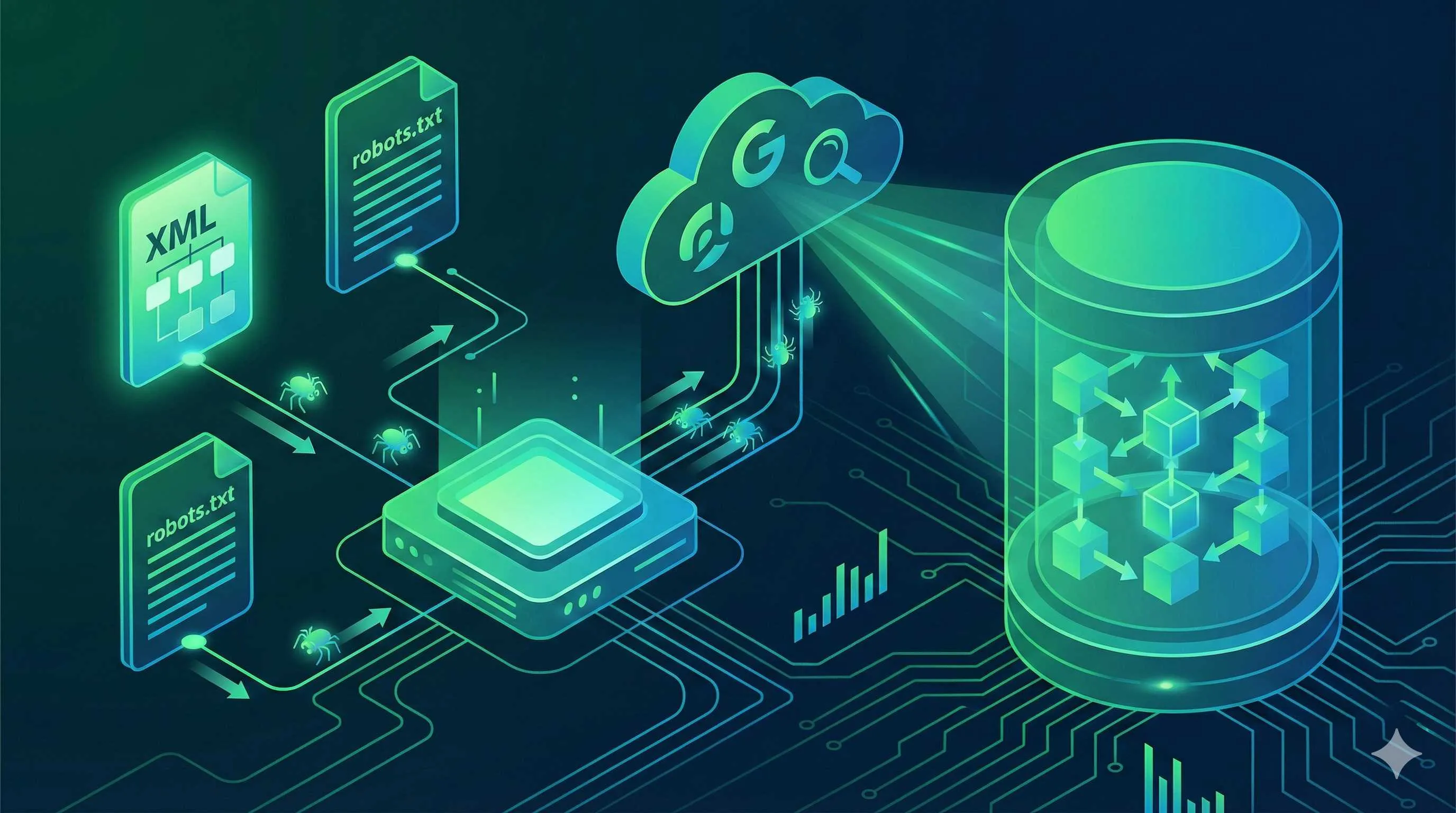

Why You Need Sitemap and robots.txt

What Sitemap Does

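A Sitemap is basically a “map” for search engines: it tells them which pages exist on your site, when they last changed, and (as hints) how often they update and which ones matter most. Google has said it ignores the `changefreq` and `priority` values but uses `lastmod` when it is consistently accurate, so keep `lastmod` honest. Without a Sitemap, search engines rely on their crawlers to discover your pages by following links, and deep pages or dynamically generated content might take months to get crawled.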

You’ll often see industry figures such as “40% faster indexing” quoted for sites with Sitemaps. Treat specific numbers like that with caution, but the effect is most noticeable on new sites with few inbound links, where a Sitemap can mean getting indexed in a week rather than a month.

What robots.txt Does

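robots.txt tells search engine crawlers which parts of your site they may crawl and which they should skip. Your admin panel and API endpoints don’t need to be crawled, and a proper robots.txt tells crawlers to skip them so they spend their crawl budget on pages that actually matter. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other sites link to it. To keep a page out of results, let it be crawled and add a `noindex` meta tag or `X-Robots-Tag` header instead.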

Here’s something critical: having no robots.txt is better than a broken one. I’ve seen too many cases where developers accidentally blocked their entire site when trying to exclude just one directory, causing the site to vanish from Google. Always test your configuration. Test it again. Then test it once more.

Three Ways to Generate Sitemaps in Next.js

Since Next.js 13 introduced the App Router, the way to generate Sitemaps has changed as well. I’ll walk you through three approaches, from simplest to most complex.

Method 1: App Router Native sitemap.ts

Best for: Next.js 13+ projects with a moderate number of pages (dozens to hundreds)

This is the official approach and requires zero external dependencies. Create a sitemap.ts file in your app directory:

```typescript
// app/sitemap.ts
import { MetadataRoute } from 'next'

export default function sitemap(): MetadataRoute.Sitemap {
  return [
    {
      url: 'https://yourdomain.com',
      lastModified: new Date(),
      changeFrequency: 'yearly',
      priority: 1,
    },
    {
      url: 'https://yourdomain.com/about',
      lastModified: new Date(),
      changeFrequency: 'monthly',
      priority: 0.8,
    },
    {
      url: 'https://yourdomain.com/blog',
      lastModified: new Date(),
      changeFrequency: 'weekly',
      priority: 0.5,
    },
  ]
}
```

Once deployed, visit https://yourdomain.com/sitemap.xml to see the generated Sitemap.

Advantages:

- Official support, rock solid

- No external dependencies

- Full TypeScript type checking

Drawbacks:

- Static pages need manual updates

- Dynamic pages require data fetching in code

Method 2: next-sitemap Package

Best for: Large projects, automation needs, or significant page counts

next-sitemap is the community’s most popular Sitemap tool with powerful features.

Installation:

```bash
npm install next-sitemap
```

Configuration file `next-sitemap.config.js`:

```javascript
/** @type {import('next-sitemap').IConfig} */
module.exports = {
  siteUrl: process.env.SITE_URL || 'https://yourdomain.com',
  generateRobotsTxt: true, // Auto-generate robots.txt
  sitemapSize: 50000, // Max 50k URLs per Sitemap
  exclude: ['/admin/*', '/api/*', '/secret'], // Skip these paths
  robotsTxtOptions: {
    policies: [
      {
        userAgent: '*',
        allow: '/',
        disallow: ['/admin', '/api'],
      },
    ],
    additionalSitemaps: [
      'https://yourdomain.com/server-sitemap.xml', // Dynamic Sitemap
    ],
  },
}
```

Add to your package.json:

```json
{
  "scripts": {
    "build": "next build",
    "postbuild": "next-sitemap"
  }
}
```

Now each time you run `npm run build`, the Sitemap is generated automatically.

Advantages:

- Feature-rich, supports multiple Sitemap splitting

- Auto-generates robots.txt

- Handles dynamic routes

- Multi-environment support

Drawbacks:

- Requires external dependency

- Configuration is a bit more involved

Method 3: Manual Generation with Route Handlers

Best for: Extreme customization needs, or Sitemaps that need real-time updates

Using Route Handlers in App Router:

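```typescript
// app/sitemap.xml/route.ts
import { NextResponse } from 'next/server'
// Hypothetical data helper: replace with your own database or CMS query
import { fetchAllPosts } from '@/lib/posts'

export async function GET() {
  // Fetch from database or CMS
  const posts = await fetchAllPosts()

  // The XML declaration must be the very first characters of the response.
  // lastmod is normalized with toISOString(): interpolating a Date object directly
  // would print something like "Fri Dec 20 2024 ...", which is not a valid lastmod.
  // Slugs containing &, < or > would also need XML-escaping before interpolation.
  const sitemap = `<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://yourdomain.com</loc>
    <lastmod>${new Date().toISOString()}</lastmod>
    <priority>1.0</priority>
  </url>
  ${posts.map(post => `
  <url>
    <loc>https://yourdomain.com/blog/${post.slug}</loc>
    <lastmod>${new Date(post.updatedAt).toISOString()}</lastmod>
    <priority>0.7</priority>
  </url>`).join('')}
</urlset>`

  return new NextResponse(sitemap, {
    status: 200,
    headers: {
      'Content-Type': 'application/xml',
      'Cache-Control': 'public, s-maxage=3600, stale-while-revalidate',
    },
  })
}
```

Advantages: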

- Complete control

- Can generate in real-time

- Support for complex logic

Drawbacks:

- You write the XML yourself

- Performance optimization is your responsibility

- Manual cache handling required
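Because Method 3 leaves the XML to you, escaping and date formatting are the easiest things to get wrong. Below is a minimal sketch of a pure function that builds the `<urlset>` string, assuming a hypothetical `PostSummary` shape with `slug` and `updatedAt`; neither the type nor the function is part of Next.js. Keeping it free of framework imports makes it easy to unit-test.

```typescript
// Hypothetical post shape returned by your CMS or database query
interface PostSummary {
  slug: string
  updatedAt: string | Date
}

// Escape the five characters that have special meaning in XML text
function escapeXml(value: string): string {
  return value
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;')
}

function buildSitemapXml(baseUrl: string, posts: PostSummary[]): string {
  const urls = posts.map((post) => ({
    // Percent-encode the slug so spaces and non-ASCII characters form a valid URL
    loc: `${baseUrl}/blog/${encodeURIComponent(post.slug)}`,
    // toISOString() yields a valid W3C Datetime such as 2024-12-20T00:00:00.000Z
    lastmod: new Date(post.updatedAt).toISOString(),
  }))

  const entries = urls
    .map(
      (u) =>
        `  <url>\n    <loc>${escapeXml(u.loc)}</loc>\n    <lastmod>${u.lastmod}</lastmod>\n  </url>`
    )
    .join('\n')

  // Nothing, not even whitespace, may precede the XML declaration
  return (
    '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    `${entries}\n` +
    '</urlset>'
  )
}
```

Inside the Route Handler you might then return `new Response(buildSitemapXml('https://yourdomain.com', posts), { headers: { 'Content-Type': 'application/xml' } })`, where `posts` comes from your own data helper. Static pages such as the homepage can be prepended to `urls` the same way.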

Comparison Table

| Method | Use Case | Difficulty | Flexibility | Recommendation |
|---|---|---|---|---|
| App Router Native | Small static sites | ⭐ | ⭐⭐ | ⭐⭐⭐⭐ |
| next-sitemap | Medium to large projects | ⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Route Handler | Extreme customization | ⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ |

I use next-sitemap for most of my projects. Set it once and forget it—the features cover what I need.

Dynamic Routes in Practice: Blog Post Sitemaps

This is the most common scenario—how do you include dynamic content like blog posts, product pages, or user profiles in your Sitemap?

Using App Router Native Approach

```typescript
// app/sitemap.ts
import { MetadataRoute } from 'next'
import { getAllPosts } from '@/lib/posts'

// An async sitemap() returns a Promise, so the return type must say so
export default async function sitemap(): Promise<MetadataRoute.Sitemap> {
  // Static pages
  const staticPages = [
    {
      url: 'https://yourdomain.com',
      lastModified: new Date(),
      changeFrequency: 'yearly' as const,
      priority: 1,
    },
    {
      url: 'https://yourdomain.com/about',
      lastModified: new Date(),
      changeFrequency: 'monthly' as const,
      priority: 0.8,
    },
  ]

  // Dynamically fetch all posts
  const posts = await getAllPosts()
  const postPages = posts.map(post => ({
    url: `https://yourdomain.com/blog/${post.slug}`,
    lastModified: new Date(post.updatedAt),
    changeFrequency: 'weekly' as const,
    priority: 0.7,
  }))

  return [...staticPages, ...postPages]
}

// Enable incremental static regeneration
export const revalidate = 3600 // Regenerate every hour
```

Key points:

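- `changeFrequency` needs an `as const` assertion; without it TypeScript widens `'weekly'` to `string`, which no longer matches the Sitemap type (`priority` is a plain number and needs no assertion)
- Use `export const revalidate` to enable incremental static regeneration (ISR)
- `lastModified` should use the article’s actual update time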
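One more pitfall: `new Date(post.updatedAt)` quietly produces an Invalid Date when `updatedAt` is missing or malformed, which can break Sitemap serialization or leave a bogus `lastmod`. Here is a sketch of a guard, using local stand-in types so it runs without Next.js (in a real `app/sitemap.ts` you would use `MetadataRoute.Sitemap[number]` instead of `SitemapEntry`); the `Post` shape is hypothetical. It also shows an alternative to sprinkling `as const`: annotate the `map` callback's return type.

```typescript
// Local stand-ins for a Next.js Sitemap entry, so this runs without Next.js
type ChangeFrequency =
  | 'always' | 'hourly' | 'daily' | 'weekly' | 'monthly' | 'yearly' | 'never'

interface SitemapEntry {
  url: string
  lastModified?: Date
  changeFrequency?: ChangeFrequency
  priority?: number
}

// Hypothetical post shape: updatedAt may be missing or malformed in real data
interface Post {
  slug: string
  updatedAt?: string | null
}

// Return a Date only if the value parses; otherwise omit lastModified entirely
function toLastModified(value: string | null | undefined): Date | undefined {
  if (!value) return undefined
  const date = new Date(value)
  return isNaN(date.getTime()) ? undefined : date
}

function postsToEntries(baseUrl: string, posts: Post[]): SitemapEntry[] {
  // The explicit return type keeps 'weekly' as a literal, so no `as const` is needed
  return posts.map((post): SitemapEntry => ({
    url: `${baseUrl}/blog/${post.slug}`,
    lastModified: toLastModified(post.updatedAt),
    changeFrequency: 'weekly',
    priority: 0.7,
  }))
}
```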

Handling Massive Sites: Over 50,000 URLs

The sitemap protocol, which Google follows, limits each Sitemap file to 50,000 URLs and 50 MB uncompressed. When you exceed either limit, split it into multiple files.

Use the generateSitemaps function:

```typescript
// app/sitemap.ts
import { MetadataRoute } from 'next'
// Placeholder data helpers: replace with your own database or CMS queries
import { getTotalPostsCount, getPosts } from '@/lib/posts'

// Generate multiple Sitemaps
export async function generateSitemaps() {
  const totalPosts = await getTotalPostsCount()
  const sitemapsCount = Math.ceil(totalPosts / 50000)
  return Array.from({ length: sitemapsCount }, (_, i) => ({
    id: i,
  }))
}

// Generate content for each Sitemap
export default async function sitemap({
  id,
}: {
  id: number
}): Promise<MetadataRoute.Sitemap> {
  const start = id * 50000
  const end = start + 50000
  const posts = await getPosts(start, end)
  return posts.map(post => ({
    url: `https://yourdomain.com/blog/${post.slug}`,
    lastModified: new Date(post.updatedAt),
    priority: 0.7,
  }))
}
```

This generates multiple Sitemap files:

- `/sitemap/0.xml`
- `/sitemap/1.xml`
- `/sitemap/2.xml`
- …and so on

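Don’t count on Next.js to stitch these together: as far as I can tell from the docs, `generateSitemaps` does not create a `/sitemap.xml` index automatically. Either submit each file in Search Console or serve a sitemap index yourself and reference it in robots.txt.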
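A sitemap index is a small XML file whose `<sitemap>` entries point at the individual Sitemap files, and it is what you would submit when you have several. Here is a sketch, assuming the split files live at `/sitemap/0.xml`, `/sitemap/1.xml`, and so on (matching the `id` values from `generateSitemaps` above) and that you serve the index yourself from a Route Handler at a path that doesn’t clash with `app/sitemap.ts`, such as a hypothetical `app/sitemap-index.xml/route.ts`. The helper names are illustrative.

```typescript
const MAX_URLS_PER_SITEMAP = 50_000

// Number of split files for `total` URLs (mirrors the Math.ceil in generateSitemaps).
// Always emit at least one file so the index is never empty.
function sitemapCount(total: number, perFile: number = MAX_URLS_PER_SITEMAP): number {
  return Math.max(1, Math.ceil(total / perFile))
}

// Render a sitemap index that lists /sitemap/0.xml ... /sitemap/{count-1}.xml
function buildSitemapIndex(baseUrl: string, count: number): string {
  const entries: string[] = []
  for (let id = 0; id < count; id++) {
    entries.push(`  <sitemap>\n    <loc>${baseUrl}/sitemap/${id}.xml</loc>\n  </sitemap>`)
  }
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    '</sitemapindex>',
  ].join('\n')
}
```

The handler’s `GET` could then return `new Response(buildSitemapIndex(baseUrl, sitemapCount(total)), { headers: { 'Content-Type': 'application/xml' } })`, with `total` coming from your own count query.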

Complete robots.txt Configuration

Basic Configuration Example

The simplest robots.txt:

```text
# Allow all crawlers to access everything
User-agent: *
Allow: /

# Specify Sitemap location
Sitemap: https://yourdomain.com/sitemap.xml
```

But in real projects, you’ll want finer control:

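```text
User-agent: *
Allow: /

# Block these directories
Disallow: /api/
Disallow: /admin/
Disallow: /dashboard/

# Block specific file types
Disallow: /*.json$
Disallow: /*?* # URLs with query parameters

# Point to Sitemap
Sitemap: https://yourdomain.com/sitemap.xml
```

Key explanations:

- `/api/`: API endpoints shouldn’t be crawled
- `/admin/` and `/dashboard/`: backend areas must stay private, but robots.txt only asks crawlers to stay away (and anyone can read it), so protect these routes with authentication too
- `/*.json$`: JSON files don’t need crawling
- `/*?*`: blocks every URL with a query string, which is aggressive; it also catches Next.js optimized images (`/_next/image?url=...`), so drop this line if image search matters to you
- Don’t block `/_next/`: it serves the JavaScript and CSS Google needs to render your pages. Likewise, avoid a rule like `/*.xml$`, which would also block `/sitemap.xml`
- The Sitemap line is crucial: don’t forget it, or search engines won’t know where to find your Sitemap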

Dynamically Generate robots.txt in Next.js

Using robots.ts in App Router:

```typescript
// app/robots.ts
import { MetadataRoute } from 'next'

export default function robots(): MetadataRoute.Robots {
  const baseUrl = 'https://yourdomain.com'

  // Disable all crawlers in development
  if (process.env.NODE_ENV === 'development') {
    return {
      rules: {
        userAgent: '*',
        disallow: '/',
      },
    }
  }

  // Production configuration
  return {
    rules: [
      {
        userAgent: '*',
        allow: '/',
        // /_next/ is deliberately not blocked: Google needs its JS and CSS to render pages
        disallow: ['/api/', '/admin/', '/dashboard/'],
      },
      {
        userAgent: 'GPTBot', // Block OpenAI crawler
        disallow: ['/'],
      },
    ],
    sitemap: `${baseUrl}/sitemap.xml`,
  }
}
```

Benefits of environment-aware configuration:

Development and preview environments shouldn’t be indexed by search engines. Environment variables let you prevent test content from being crawled.

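I want to emphasize: many developers use the same config for both development and production, which is risky. Also note that `next build` sets `NODE_ENV` to `production` for every build, including preview deployments, so a `NODE_ENV` check alone won’t keep previews out of search results. Here’s the approach I recommend: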

```typescript
// app/robots.ts
import { MetadataRoute } from 'next'

export default function robots(): MetadataRoute.Robots {
  const baseUrl = process.env.NEXT_PUBLIC_SITE_URL || 'https://yourdomain.com'
  const isProduction = process.env.NODE_ENV === 'production'
  const isDeployPreview = process.env.NEXT_PUBLIC_VERCEL_ENV === 'preview'

  // Non-production: block all crawlers
  if (!isProduction || isDeployPreview) {
    return {
      rules: {
        userAgent: '*',
        disallow: '/',
      },
    }
  }

  // Production only
  return {
    rules: [
      {
        userAgent: '*',
        allow: '/',
        // /_next/ stays crawlable so Google can fetch the JS and CSS it needs to render
        disallow: ['/api/', '/admin/', '/dashboard/', '/*.json$'],
      },
      {
        userAgent: 'GPTBot',
        disallow: ['/'],
      },
    ],
    sitemap: `${baseUrl}/sitemap.xml`,
  }
}
```

This prevents both “accidentally letting search engines crawl test content” and “accidentally blocking production.”
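The environment gate is the part of `robots.ts` most worth unit-testing. A sketch that pulls it into plain functions and renders the robots.txt text directly (instead of a `MetadataRoute.Robots` object), assuming `VERCEL_ENV` as the preview signal; Vercel sets it to `production`, `preview`, or `development`, and on other hosts you would substitute your own variable. The function names and the rule list are illustrative and mirror the rules above.

```typescript
interface RobotsRule {
  userAgent: string
  allow?: string[]
  disallow?: string[]
}

type Env = Record<string, string | undefined>

// next build sets NODE_ENV=production even for previews, so check the host's flag too
function isIndexableDeployment(env: Env): boolean {
  if (env.NODE_ENV !== 'production') return false
  if (env.VERCEL_ENV && env.VERCEL_ENV !== 'production') return false
  return true
}

function renderRobotsTxt(env: Env, baseUrl: string): string {
  if (!isIndexableDeployment(env)) {
    // Block every crawler on dev and preview deployments
    return 'User-agent: *\nDisallow: /\n'
  }

  const rules: RobotsRule[] = [
    { userAgent: '*', allow: ['/'], disallow: ['/api/', '/admin/', '/dashboard/'] },
    { userAgent: 'GPTBot', disallow: ['/'] },
  ]

  const groups = rules.map((rule) =>
    [
      `User-agent: ${rule.userAgent}`,
      ...(rule.allow ?? []).map((path) => `Allow: ${path}`),
      ...(rule.disallow ?? []).map((path) => `Disallow: ${path}`),
    ].join('\n')
  )

  return `${groups.join('\n\n')}\n\nSitemap: ${baseUrl}/sitemap.xml\n`
}
```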

Common Mistakes to Avoid

Mistake 1: Accidentally blocking your entire site

```text
# ❌ Wrong
User-agent: *
Disallow: /
```

This blocks everything! The correct approach:

```text
# ✅ Right
User-agent: *
Allow: /
Disallow: /admin/
```

Mistake 2: Forgetting to reference Sitemap

Many people set up a Sitemap but forget to declare it in robots.txt, leaving search engines unaware of where to find it. Make sure this line is present:

```text
# ✅ Don't forget this line
Sitemap: https://yourdomain.com/sitemap.xml
```

Mistake 3: Path formatting errors

```text
# ❌ Wrong: path doesn't start with /
Disallow: admin/

# ✅ Right: paths must start with /
Disallow: /admin/
```

Mistake 4: Over-restriction

```text
# ❌ Over-restrictive: blocking images
Disallow: /*.jpg$
Disallow: /*.png$
```

Images are part of your content. Let search engines crawl them unless you have a specific reason not to.

Testing approach:

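- Visit https://yourdomain.com/robots.txt to verify the content
- Check the robots.txt report in Google Search Console (it replaced the older robots.txt Tester) to confirm Google fetched the file without errors
- Test whether specific URLs are allowed to be crawled, for example with the URL Inspection tool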
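To check rules locally before a deploy, here is a simplified matcher that follows Google’s documented precedence: the rule with the longest matching path wins, `Allow` wins a tie, `*` matches any run of characters, and a trailing `$` anchors the end of the URL. Its simplifications are assumptions, not Google’s full behavior: user agents must match a group name exactly (case-insensitively), falling back to `*`, and paths are compared without percent-decoding. Treat Search Console as the final word.

```typescript
interface Rule {
  allow: boolean
  pattern: string
}

// Collect Allow/Disallow rules per lowercase user agent; consecutive User-agent lines share a group
function parseGroups(robotsTxt: string): Map<string, Rule[]> {
  const groups = new Map<string, Rule[]>()
  let agents: string[] = []
  let lastWasAgent = false
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, '').trim() // strip comments
    const colon = line.indexOf(':')
    if (colon === -1) continue
    const key = line.slice(0, colon).trim().toLowerCase()
    const value = line.slice(colon + 1).trim()
    if (key === 'user-agent') {
      if (!lastWasAgent) agents = []
      const agent = value.toLowerCase()
      agents.push(agent)
      if (!groups.has(agent)) groups.set(agent, [])
      lastWasAgent = true
    } else if (key === 'allow' || key === 'disallow') {
      lastWasAgent = false
      if (value === '') continue // an empty Disallow means "no restriction"
      for (const agent of agents) {
        groups.get(agent)!.push({ allow: key === 'allow', pattern: value })
      }
    }
  }
  return groups
}

// Translate a robots.txt path pattern (* and trailing $) into an anchored RegExp
function patternToRegExp(pattern: string): RegExp {
  const anchored = pattern.endsWith('$')
  const body = anchored ? pattern.slice(0, -1) : pattern
  const source = body
    .split('*')
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, '\\$&'))
    .join('.*')
  return new RegExp('^' + source + (anchored ? '$' : ''))
}

function isAllowed(robotsTxt: string, userAgent: string, path: string): boolean {
  if (path === '/robots.txt') return true // always implicitly allowed
  const groups = parseGroups(robotsTxt)
  const rules = groups.get(userAgent.toLowerCase()) ?? groups.get('*') ?? []
  let best: Rule | undefined
  for (const rule of rules) {
    if (!patternToRegExp(rule.pattern).test(path)) continue
    const longer = !best || rule.pattern.length > best.pattern.length
    const tieAllow = !!best && rule.pattern.length === best.pattern.length && rule.allow
    if (longer || tieAllow) best = rule
  }
  return best ? best.allow : true // no matching rule means allowed
}
```

For example, against the robots.txt from the “finer control” section, `isAllowed(robots, 'Googlebot', '/admin/users')` is `false` because `Disallow: /admin/` is a longer match than `Allow: /`.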

Google Search Console Integration & Verification

After configuring your Sitemap and robots.txt, add your site to Google Search Console and submit the Sitemap so Google can discover and index your site more quickly.

Adding Your Site to Search Console

- Visit Google Search Console

- Click “Add property”

- Choose “Domain” or “URL prefix” verification

I recommend DNS verification:

- Add a TXT record at your domain registrar

- Wait a few minutes for DNS to propagate

- Return to Search Console and click verify

Or use HTML file verification:

Place a verification file Google provides (like google1234567890abcdef.html) in your public directory.

Submitting Your Sitemap

After verification:

- Click “Sitemaps” in the left menu

- Enter your Sitemap URL: sitemap.xml
- Click “Submit”

Timeline expectations:

- Google won’t process immediately after submission

- Usually starts crawling within 1-7 days

- Monitor progress in Search Console

Troubleshooting Common Issues

Issue: “Couldn’t fetch Sitemap”

This is the most common problem. Possible causes:

Cause 1: Middleware is blocking Googlebot

If you use Next.js Middleware for authentication, it might block Googlebot too.

Solution:

```typescript
// middleware.ts
import { NextResponse } from 'next/server'
import type { NextRequest } from 'next/server'

export function middleware(request: NextRequest) {
  const { pathname } = request.nextUrl

  // Sitemap and robots.txt must be accessible to everyone, including crawlers.
  // '/sitemap/' covers split files such as /sitemap/0.xml from generateSitemaps.
  if (
    pathname === '/robots.txt' ||
    pathname === '/sitemap.xml' ||
    pathname.startsWith('/sitemap/') ||
    pathname.startsWith('/sitemap-')
  ) {
    return NextResponse.next()
  }

  // Don't skip authentication for requests whose User-Agent merely *claims* to be
  // a crawler: the header is trivially spoofed, so a "Googlebot" bypass would let
  // anyone into /dashboard. Crawlers don't need private pages anyway.

  // Regular user authentication logic
  const token = request.cookies.get('session-token')
  if (!token && pathname.startsWith('/dashboard')) {
    return NextResponse.redirect(new URL('/login', request.url))
  }

  return NextResponse.next()
}

export const config = {
  matcher: ['/((?!_next/static|_next/image|favicon.ico).*)'],
}
```

This keeps the Sitemap and robots.txt reachable for crawlers, while authentication keeps protecting private pages for every visitor, bot or not.
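Here is a stricter, testable version of that path check, as a sketch. The `/sitemap-*.xml` pattern is an assumption meant to cover custom files such as a hand-written sitemap index; adjust it to the paths you actually serve. If you ever need to confirm that a request really comes from Google, Google documents verifying Googlebot with a reverse DNS lookup rather than trusting the User-Agent header.

```typescript
// Paths that must stay public so crawlers can always fetch them
function isSeoFile(pathname: string): boolean {
  return (
    pathname === '/robots.txt' ||
    pathname === '/sitemap.xml' ||
    // Split files from generateSitemaps, e.g. /sitemap/0.xml
    /^\/sitemap\/\d+\.xml$/.test(pathname) ||
    // Hypothetical custom files, e.g. /sitemap-index.xml
    /^\/sitemap-[\w-]+\.xml$/.test(pathname)
  )
}
```

Unlike a bare `startsWith('/sitemap-')`, the anchored patterns won’t accidentally exempt unrelated routes that happen to share the prefix.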

Cause 2: Caching issues

Google Search Console caches failed fetch attempts. Even after fixing the problem, it might still show “Couldn’t fetch.”

Solution:

- Append a version parameter to the Sitemap URL, for example sitemap.xml?v=20231220 (if your robots.txt disallows `/*?*`, that URL will be blocked too)
- Wait a few days for Google to retry

- Or delete the old Sitemap submission and resubmit

Cause 3: Invalid XML format

Verify your Sitemap has correct XML formatting:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://yourdomain.com</loc>
    <lastmod>2024-12-20</lastmod>
  </url>
</urlset>
```

Important:

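- Start with `<?xml`, not `<xml`, and put nothing before it: even a leading blank line or space makes the declaration invalid
- `lastmod` must use W3C Datetime, a profile of ISO 8601: either a date (YYYY-MM-DD) or a full timestamp with a time zone, such as 2024-12-20T10:30:00+00:00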

Use an online XML validation tool to check formatting.
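A frequent source of invalid `lastmod` values is interpolating a `Date` object straight into a template string, which prints something like `Fri Dec 20 2024 ...`. As a quick self-check, here is a sketch that validates the W3C Datetime formats the sitemap protocol accepts. It checks shape only, not whether the date exists, and its regex-based extraction assumes simple, unnested `<lastmod>` elements.

```typescript
// W3C Datetime: YYYY, YYYY-MM, YYYY-MM-DD, or YYYY-MM-DDThh:mm[:ss[.s]] plus Z or ±hh:mm
const W3C_DATETIME =
  /^\d{4}(-\d{2}(-\d{2}(T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:\d{2}))?)?)?$/

// Format check only: it does not reject impossible dates such as month 13
function isValidLastmod(value: string): boolean {
  return W3C_DATETIME.test(value)
}

// Return every <lastmod> value in a sitemap string that fails the format check
function invalidLastmods(xml: string): string[] {
  const pattern = /<lastmod>([^<]*)<\/lastmod>/g
  const invalid: string[] = []
  let match: RegExpExecArray | null
  while ((match = pattern.exec(xml)) !== null) {
    const value = match[1].trim()
    if (!isValidLastmod(value)) invalid.push(value)
  }
  return invalid
}
```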

Issue: “Submitted but not indexed”

Sitemap was submitted successfully, but pages still aren’t indexed. Possible causes:

- robots.txt is blocking pages: Check for misconfiguration

- Page quality issues: Too short, duplicate content, or considered low-quality

- New site: New domains need time to build trust

- No backlinks: Sites with zero external links struggle to get indexed

Solutions:

- Use Google Search Console’s “URL Inspection” tool to see how Google views your pages

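- Check pages for `noindex` meta tags (and `X-Robots-Tag: noindex` response headers)
- Ensure pages have substantial, original content (300 words is a common rule of thumb, but Google has said there is no minimum word count)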

- Try acquiring some backlinks
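To spot-check for `noindex`, here is a rough sketch that scans a page’s raw HTML and response headers. It is regex-based rather than a real HTML parser, and it only sees the server-rendered HTML, so a tag injected later by client-side JavaScript won’t show up. The function name and return format are illustrative.

```typescript
// Rough scan for noindex signals in a fetched page's HTML and response headers
function findNoindex(html: string, headers: Record<string, string>): string[] {
  const findings: string[] = []

  // Header form, e.g. "X-Robots-Tag: noindex" (header names are case-insensitive)
  for (const [name, value] of Object.entries(headers)) {
    if (name.toLowerCase() === 'x-robots-tag' && /noindex/i.test(value)) {
      findings.push(`header ${name}: ${value}`)
    }
  }

  // Meta tag form, e.g. <meta name="robots" content="noindex, follow">
  const metaTags = html.match(/<meta\b[^>]*>/gi) ?? []
  for (const tag of metaTags) {
    const targetsCrawlers = /name\s*=\s*["']?(?:robots|googlebot)["'\s/>]/i.test(tag)
    if (targetsCrawlers && /content\s*=\s*["'][^"']*noindex/i.test(tag)) {
      findings.push(`meta tag ${tag}`)
    }
  }

  return findings
}
```

With Node 18+ you could feed it a live page via `const res = await fetch(url)` and `findNoindex(await res.text(), Object.fromEntries(res.headers))`; an empty array means neither signal was found.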

Monitoring Indexing Status

After submission, regularly check progress:

- Page indexing report (formerly the Coverage report): See which pages are indexed and which have issues

- Index stats: Total number of indexed pages

- Sitemap status: Whether Google is successfully reading Sitemap

If pages aren’t indexed, use the URL Inspection tool to request re-crawling.

Real Story: My Biggest Mistakes

Let me share a real experience. Last year I took over an e-commerce site that had been live for 3 months but had zero search visibility.

I immediately checked robots.txt:

```text
User-agent: *
Disallow: /
```

The entire site was blocked. Worse, it had been like this for 3 months. When I asked the deployment team about it, they said “that wasn’t me.” Eventually we found out a temporary developer had used the same configuration across all environments to prevent test sites from being indexed, and that included production.

The recovery was brutal. I deleted the broken robots.txt, generated a proper Sitemap, submitted it to Google Search Console, and then…waited. After a week, the site started appearing in search results.

That incident taught me a valuable habit: I check Sitemap and robots.txt before every deployment, and I always use different configs for different environments. Development gets one set, production gets another.

I also had a Middleware issue once. I added JWT authentication middleware to the site and accidentally blocked Googlebot. Search Console kept reporting “Couldn’t fetch Sitemap,” and I spent way too long thinking it was the Sitemap format before realizing it was the Middleware causing problems.

SEO seems simple on the surface, but details matter tremendously. One configuration mistake can make your site disappear from search results.

Troubleshooting & Optimization

Pre-Deployment Checklist

Before shipping, check everything:

- Sitemap is accessible (https://yourdomain.com/sitemap.xml)
- Sitemap XML format is valid
- robots.txt is accessible (https://yourdomain.com/robots.txt)
- robots.txt doesn’t block important pages

- robots.txt includes Sitemap reference

- Sitemap includes all important pages

- Dynamic pages update Sitemap automatically

- Search Console has verified site ownership

- Sitemap submitted to Search Console

- Middleware doesn’t block crawler access
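Parts of this checklist can be automated. Here is a sketch of a pre-deploy check for the robots.txt items, assuming you have already fetched the file’s text (for example from a staging URL). It flags `Disallow: /` under `User-agent: *`, a missing Sitemap line, and relative Sitemap URLs. It is a heuristic, not a full parser: it would also flag a group that pairs `Disallow: /` with `Allow: /`, where Google lets `Allow` win the tie.

```typescript
// Heuristic robots.txt lint for the mistakes described in this article
function checkRobotsTxt(robotsTxt: string): string[] {
  const problems: string[] = []
  const lines = robotsTxt.split(/\r?\n/).map((l) => l.replace(/#.*$/, '').trim())
  let agents: string[] = []
  let inAgentRun = false

  for (const line of lines) {
    const match = /^([A-Za-z-]+)\s*:\s*(.*)$/.exec(line)
    if (!match) continue
    const key = match[1].toLowerCase()
    const value = match[2].trim()

    if (key === 'user-agent') {
      // Consecutive User-agent lines share the rules that follow them
      agents = inAgentRun ? [...agents, value] : [value]
      inAgentRun = true
      continue
    }
    inAgentRun = false

    if (key === 'disallow' && value === '/' && agents.includes('*')) {
      problems.push('Disallow: / applies to every crawler (User-agent: *)')
    }
    if (key === 'sitemap' && !/^https?:\/\//i.test(value)) {
      problems.push(`Sitemap URL should be absolute: ${value}`)
    }
  }

  if (!lines.some((l) => /^sitemap\s*:/i.test(l))) {
    problems.push('No Sitemap: line found')
  }
  return problems
}
```

In CI you could fail the build whenever the returned array is non-empty.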

Performance Optimization

Sitemap Caching Strategy

If you generate Sitemap via API routes, add caching:

```typescript
// app/sitemap.xml/route.ts
import { NextResponse } from 'next/server'

export const revalidate = 3600 // 1-hour cache

export async function GET() {
  // ...generate Sitemap
  return new NextResponse(sitemap, {
    headers: {
      'Content-Type': 'application/xml',
      'Cache-Control': 'public, s-maxage=3600, stale-while-revalidate',
    },
  })
}
```

Incremental vs Full Rebuild

- Small sites (< 1,000 pages): Full rebuild every time—simpler

- Medium sites (1,000-10,000 pages): Use ISR, revalidate hourly or daily

- Large sites (> 10,000 pages): Split multiple Sitemaps, incrementally update changes

CDN Configuration

If you’re using Cloudflare or similar, ensure Sitemap and robots.txt are cached:

- Set appropriate Cache-Control headers

- Allow CDN to cache XML and TXT files

- Purge cache when content updates

Wrapping Up

Let me recap the whole process:

Choose your Sitemap generation approach:

- Small projects: use App Router native

- Medium/large projects: use next-sitemap

- Need extreme customization: use Route Handlers

Configure robots.txt:

- Block directories you don’t want indexed

- Include Sitemap reference

- Block crawlers in development

Submit to Google Search Console:

- Verify site ownership

- Submit Sitemap

- Monitor indexing progress

Handle common issues:

- Don’t let Middleware block crawlers

- Verify XML format

- Use timestamps to bypass caching issues

Honestly, configuring Sitemap and robots.txt isn’t rocket science, but there are countless details. I made serious mistakes when I first set things up—my entire site was blocked by robots.txt for a month before I realized it.

Now with every new project launch, I work through this checklist and rarely encounter problems. I hope this article helps you avoid the same pitfalls and gets your site indexed quickly.

Have you run into other issues, or do you have experiences to share? Drop a comment below!

FAQ

Is Sitemap required?

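Not strictly: Google notes that small, well-linked sites can be discovered without one. But a Sitemap is strongly recommended, especially for dynamically generated pages, which might otherwise not be crawled for months.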

How do I generate Sitemap for dynamic routes?

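Fetch the data in app/sitemap.ts and map each item to an entry with an absolute URL. Example: const posts = await getPosts(); return posts.map(post => ({ url: `https://yourdomain.com/posts/${post.id}`, ... }))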

Ensure all dynamic pages that need indexing are included.

What happens if robots.txt is misconfigured?

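Crawlers can be kept away from important pages, or from your entire site: a single Disallow: / under User-agent: * does that. This is one of the most common mistakes. Make sure robots.txt only blocks paths that don't need crawling (like /api/ and /admin/).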

How long until Google crawls Sitemap after submission?

Crawling usually starts within 1-7 days of submission, though indexing every page can take longer. Recommendations:

1) Submit Sitemap to Google Search Console

2) Use "Request Indexing" feature

3) Keep content updated

4) Be patient

How do I verify Sitemap is correct?

1) Open /sitemap.xml directly in a browser to check the format

2) Use an online XML validator

3) Submit it in Google Search Console and check the status

4) Check that all important pages are included

5) Confirm the URLs are correct (use absolute URLs, including https://)

Can robots.txt block specific crawlers?

Yes. Give each crawler its own rule group. Example: rules: [{ userAgent: 'Googlebot', allow: '/' }, { userAgent: 'Baiduspider', disallow: '/' }]

This allows Google to crawl but blocks Baidu.

Does Sitemap need to include all pages?

No, only the pages you want indexed. Include:

1) All pages that need indexing

2) Dynamically generated pages

3) Deep pages (not easily discovered by crawlers)

No need to include: 404 pages, login pages, admin panels, etc.

10 min read · Published on: Dec 20, 2025 · Modified on: Jan 22, 2026

